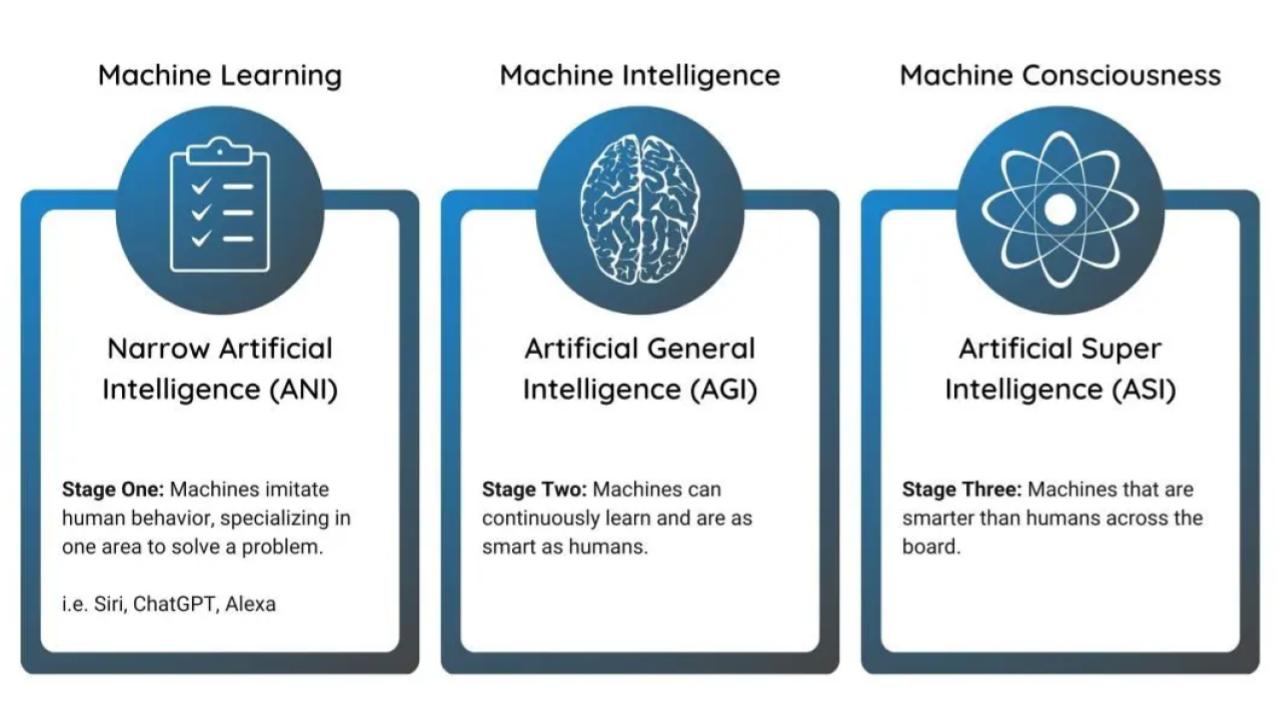

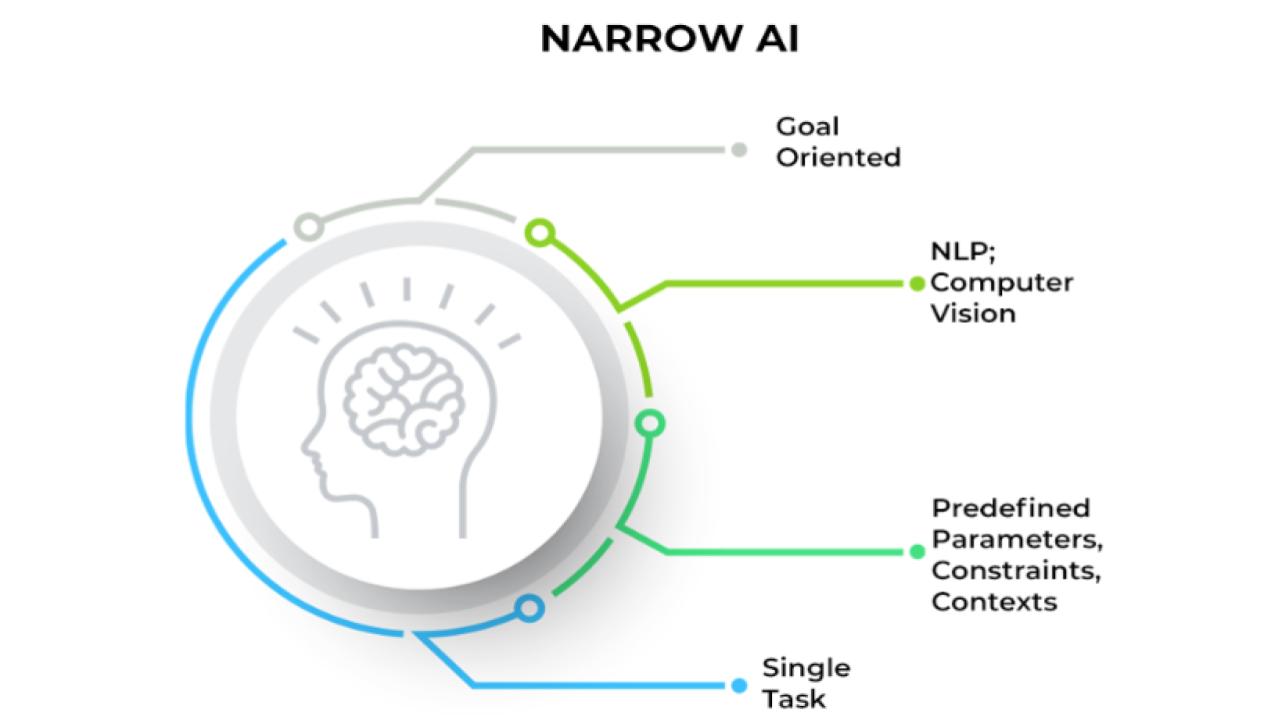

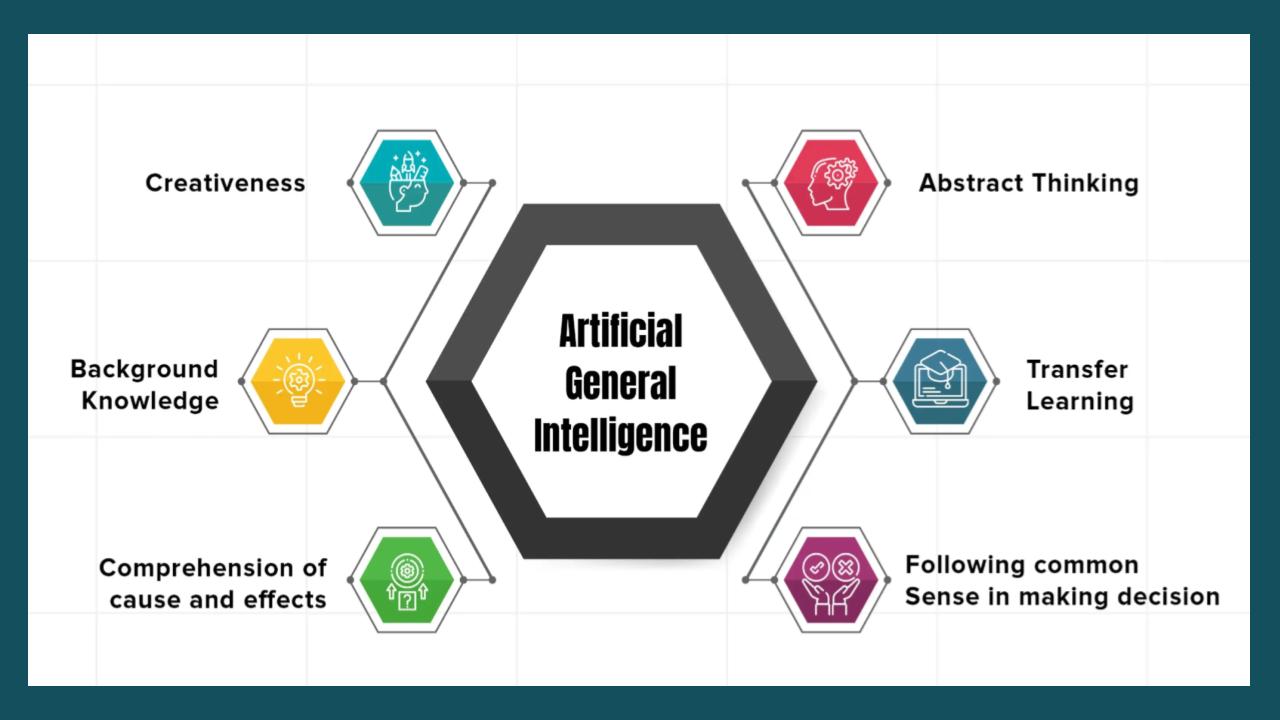

The Artificial Intelligence onAir network of hubs focuses on the three types of AI: ANI, or Artificial Narrow Intelligence; AGI, or Artificial General Intelligence; and ASI, or Artificial Super Intelligence. The central hub for this network is at ai.onair.cc. The first sub-hub in the network is the AI Policy hub.

If you or your organization would like to curate an AI-related hub or a post within this hub (e.g., a profile post on your organization), contact ai.curators@onair.cc.

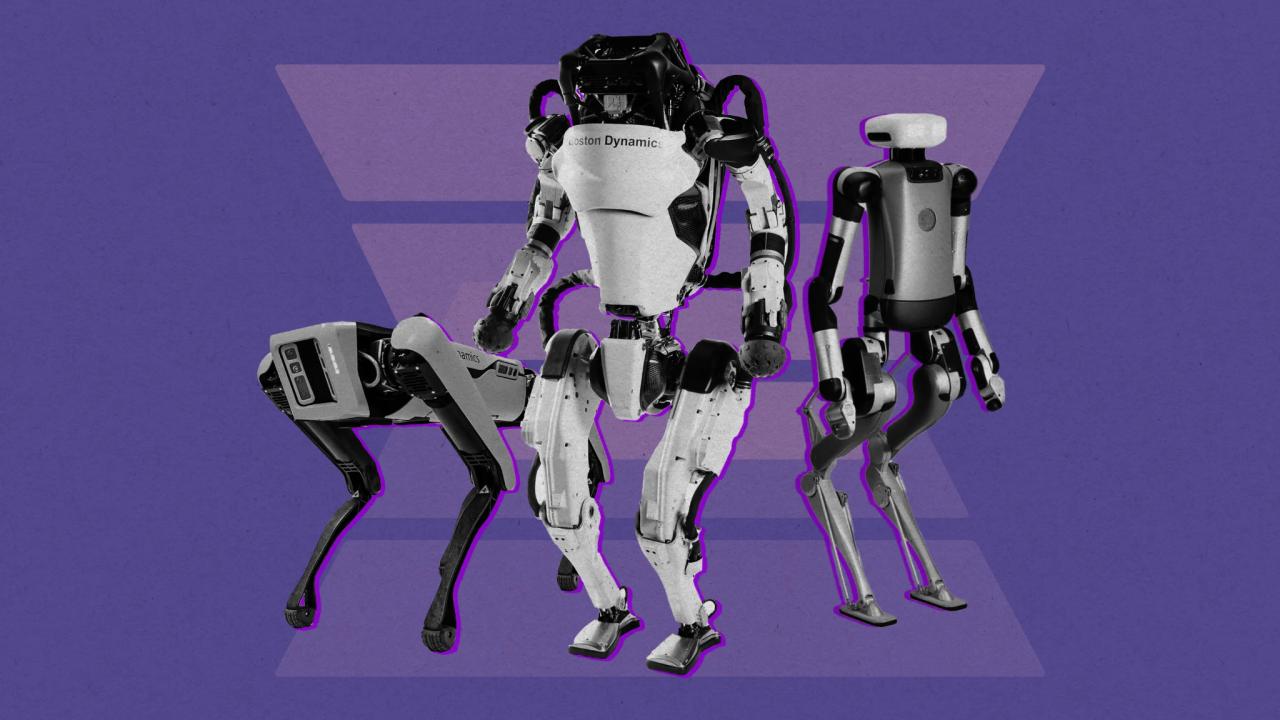

OnAir Post: AI onAir Network of Hubs